Motivation

In August, 2003, a large-scale blackout affected the northeast of the U.S. and adjoining parts of Canada. Roughly 50 million people lost power for several days; the resulting human and economic impact was substantial. Had the blackout lasted longer, the impact would have been severe.

Similar national-scale blackouts have occurred worldwide: Switzerland-France-Italy (September 2003, 57 million people affected during roughly one day); Brazil (1999, 75 million people affected for many hours). Recently, the San Diego blackout affected roughly 3 million people.

Why?

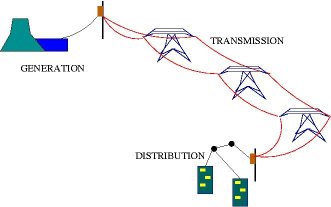

The average person might feel that such large blackout events are due to excessive demand for power (or, equivalently, insufficient generation capacity). The reality is that such a simple explanation is fundamentally wrong; as it turns out, the blackouts were caused by inadequacies in national transmission networks. Modern power networks have a three-tier structure:

Modern transmission networks are large and complex. They comprise a large number of power lines (numbering in the thousands) which carry very large amounts of power over very long distances. The operation of transmission networks is essentially complex. We expand on this below. In particular, the direct (enumerational) study of all possible fault contingencies is computationally infeasible. Following the 2003 North American blackout, the U.S.-Canada task force charged with studying the causes of the blackout (report available from here) stated that the leading cause of the blackout was "inadequate system understanding".

How?

Given a power network, its operational

characteristics (power flows, voltages, etc.) are determined by the laws of physics, rather than

through direct control. What this means is that given a network,

a computation is required to determine the power flows. The

computation is complex if a fairly accurate model of power flows is

being used; even if a (linearized) simplification is used the

computation requires the solution of a linear system of equations.

The behavior of a power network can best be described as non-monotone; in simplified terms we might say that the behavior can be counterintuitive. A consequence of this fact is that when an existing network is modified, even experienced engineers have to resort to computational software to determine the behavior of the network. This can prove a time-consuming task.

In order to understand how a large-scale blackout can occur, we have to put two factors into perspective: (1) externally caused fault events, and (2) power line "limits'. First, transmission networks are subject to a number of externally-caused power line failures. For example, such failures could be caused by fires, lightning, and so on. It is not uncommon for single failures to happen; less commonly we might have four or five such failures happening within a relatively short span of time (say, a few hours). Second, the power lines in a network have a "rating" or "limit". This limit is not a true "capacity": it may be exceeded. However, when power flowing on a line (significantly) exceeds its limit, then that line, left unattended, could overheat and experience mechanical damage, or otherwise may impede the functioning of the grid. [The precise technical explanation exceeds the scope of this writeup] This is a process that may require time on the order of minutes. In practice protection equipment will quickly disable theline.

Now we can analyze the two factors jointly. Suppose that a number of externally caused failures happen. Then, effectively, we are operating a smaller network, the residual network. The laws of physics take over: a new set of power flows will take hold (the change occurs quite rapidly, e.g. at the speed of light). This new set of power flows, unfortunately, may exceed the limits of some of the surviving lines -- with the result that these lines will be taken out of operation. Then we will have an even smaller network.

Essentially what we have then is a vicious cycle. Occasionally, this may lead to a catastrophic cascade, rendering the network inoperable: either a substantial fraction of the demand will be unserviceable, or resulting power surges could damage generators or other equipment -- in this case the problem is "solved" by disabling large portions of the demand. This is how a blackout happens.

Once the blackout has started, power cannot simply be turned back on (or the cycle will resume). The repair process is time consuming and presents its own computational challenges.

Analysis of real blackout data, and that obtained from simulations, shows that "cascades" live up to their name: lines are disabled at an accelerated rate as we approach the final stage of the cascade. This fact has been used to argue against centralized real-time control. However, it is worth noting that even if centralization of information were possible, the necessarily methodological tools or computational resources need to successfully halt a cascade do not exist.

The behavior of a power network can best be described as non-monotone; in simplified terms we might say that the behavior can be counterintuitive. A consequence of this fact is that when an existing network is modified, even experienced engineers have to resort to computational software to determine the behavior of the network. This can prove a time-consuming task.

In order to understand how a large-scale blackout can occur, we have to put two factors into perspective: (1) externally caused fault events, and (2) power line "limits'. First, transmission networks are subject to a number of externally-caused power line failures. For example, such failures could be caused by fires, lightning, and so on. It is not uncommon for single failures to happen; less commonly we might have four or five such failures happening within a relatively short span of time (say, a few hours). Second, the power lines in a network have a "rating" or "limit". This limit is not a true "capacity": it may be exceeded. However, when power flowing on a line (significantly) exceeds its limit, then that line, left unattended, could overheat and experience mechanical damage, or otherwise may impede the functioning of the grid. [The precise technical explanation exceeds the scope of this writeup] This is a process that may require time on the order of minutes. In practice protection equipment will quickly disable theline.

Now we can analyze the two factors jointly. Suppose that a number of externally caused failures happen. Then, effectively, we are operating a smaller network, the residual network. The laws of physics take over: a new set of power flows will take hold (the change occurs quite rapidly, e.g. at the speed of light). This new set of power flows, unfortunately, may exceed the limits of some of the surviving lines -- with the result that these lines will be taken out of operation. Then we will have an even smaller network.

Essentially what we have then is a vicious cycle. Occasionally, this may lead to a catastrophic cascade, rendering the network inoperable: either a substantial fraction of the demand will be unserviceable, or resulting power surges could damage generators or other equipment -- in this case the problem is "solved" by disabling large portions of the demand. This is how a blackout happens.

Once the blackout has started, power cannot simply be turned back on (or the cycle will resume). The repair process is time consuming and presents its own computational challenges.

Analysis of real blackout data, and that obtained from simulations, shows that "cascades" live up to their name: lines are disabled at an accelerated rate as we approach the final stage of the cascade. This fact has been used to argue against centralized real-time control. However, it is worth noting that even if centralization of information were possible, the necessarily methodological tools or computational resources need to successfully halt a cascade do not exist.

What we are doing

Our current work focuses on two distinct areas:

- Developing online control algorithms for mitigating

cascading blackouts. We assume a slow-moving cascade so that

centralized computation is possible immediately after the start of the

cascade. A control algorithm is then computed; the control will

be applied as the cascade unfolds. The controls we have in mind

shed demand as a function of real-time observations, with the objective

of maximizing the amount of surviving demand when the cascade

ends. Our initial work is reported here: Optimal

adaptive control of cascading power grid failures. Also see:

Optimal control of cascading power grid failures, Proc. 2011 IEEE

CDC-ECC

Animation, here. Software, here (instructions available from author). This work is now funded by DTRA grant HDTRA1-13-1-0021. - Diagnosing complex contingencies in grids, using AC power

flow models (joint work with S. Barnett, PhD student., Applied

Math) We assume a fictitious attacker who can increase (in

particular) the reactance of power lines, within bounds and within a

total budget. The goal of the attacker is to maximize the total

"voltage loss" in the grid. Our initial experiments indicate that

in the solution to this problem the optimal budget allocation is

focused on a small number of lines. We can interpret this set of

lines as constituting a vulnerability (as large reactance tends to

inhibit flow).

- In joint work with colleagues from Columbia, MIT and other institutions,

we are developing methodologies for investigating the impact of geographically

correlated faults. Examples include earthquakes, EMP events (such as those

arising from solar flares) and large forest fires. Such events could

potentially cause significant, localized damage to the power grid, potentially

developing into a cascade. Some initial work in this direction is described

in this report. A

related topic is the impact that such a cascading failure could cause on

dependent networks, such as data networks.

The second topic above is related to previous work. In D. Bienstock and A. Verma, The N-k problem in power grids: new models, formulations and computation (SIOPT 20 (2010), 2352-2380) we considered a similar problem on DC power flows. Here the goal of the attacker was to maximize the

maximum line overload. We found that the optimal attacker action (mostly) focused on a few lines, thus indicating a contingency. This algorithm scaled work up to grids with approximately 1000 lines, and was the forerunner of the work described above.

The main motivation for this line work is the so-called "N-k problem". Suppose that the number of "external fault events" that a network could be subjected to were fairly small. Then we could enumerate all of these events and engineer the network so as to withstand all of them. But the reality is that the number of such events is not small (even if polynomially bounded); in fact it is astronomically large. To put it differently, we must deal with combinations of individual, externally caused single-line failures. What is worse, such combinations are extremely rare. In summary, we must handle a combinatorial explosion of unlikely (unlucky) events. A straightforward combinatorial approach to this problem does not seem to work -- hence our "continuous attacker" method.

Previous work focused on how to prudently invest on an existing network so as to increase the likelihood of its successfully "riding out" a failure cascade, without real-time intervention. This work has had several directions. In one model, we consider how to selectively add to the capacity of existing power lines, so that in every possible fault scenario the resulting failure cascade is immediately arrested (no further outages). This constitutes a conservative model, but with the advantage that it can be tackled using standard optimization techniques.

In another model, we extend and adapt a model initially developed by Carreras, Lynch, Sachtjen, Dobson and Newman. Here, we do not require that each potential cascade be immediately stopped -- rather, we allow a "cycle of failures" to occur, but instead we require that the network eventually become stabilized without requiring large loss of demand.

This work was reported in D. Bienstock and S. Mattia, Using mixed-integer programming to solve power grid blackout problems, Discrete Optimization 4 (2007), 115-141.